On May 22, Anthropic officially announced two new models: Claude Opus 4 and Claude Sonnet 4. The company claimed that Claude Opus 4 is the most powerful model it has ever developed and “the best coding model in the world.” Meanwhile, Claude Sonnet 4 received a significant upgrade, offering superior programming and reasoning capabilities compared to its predecessor.

Both are hybrid models that can operate in two distinct modes: near-instant responses, and deeper reasoning for complex problem-solving. Anthropic stated that the models can alternate between reasoning, research, and the use of external tools, such as web search, to improve their answers.

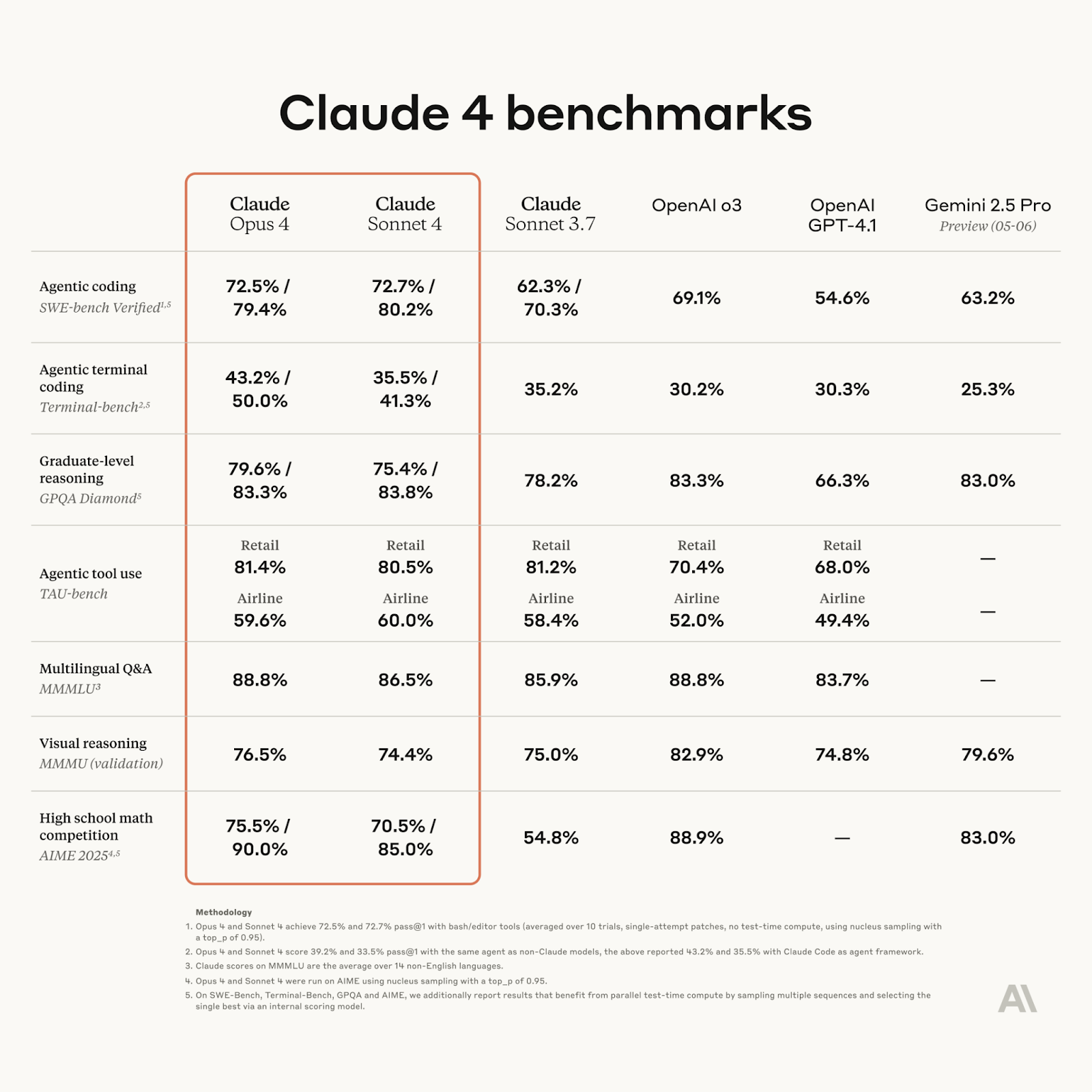

Anthropic says Claude Opus 4 outperforms competitors on agentic coding benchmarks and can work for extended periods on complex, long-running tasks, which the company presents as a significant leap in the capabilities of AI agents.

According to Anthropic, Claude Opus 4 scored 72.5% on SWE-bench Verified, a rigorous software engineering benchmark, surpassing OpenAI’s GPT-4.1, which was released in April and scored 54.6%.

In 2025, major players in the AI industry are shifting toward “reasoning models,” which methodically analyze problems before generating a response. OpenAI initiated this trend in December 2024 with its “o series,” and Google followed with Gemini 2.5 Pro, which features an experimental “Deep Think” capability.

Claude Sparks Outcry for Reporting Users in Testing

Anthropic’s first developer conference on May 22 was marred by backlash after a controversial feature of Claude Opus 4 came to light.

According to VentureBeat, some developers and users reacted strongly upon discovering that during testing, Claude could autonomously report users to authorities if it detected “egregiously immoral” behavior.

The report cited Anthropic AI alignment researcher Sam Bowman, who posted on X that the chatbot could “use command-line tools to contact the press, reach out to regulators, lock users out of systems, or all of the above.”

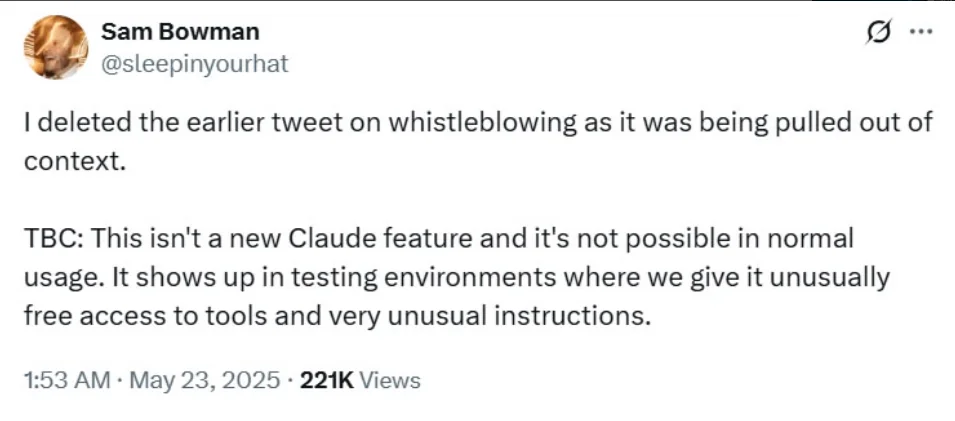

Bowman later deleted the post, stating it had been taken out of context. He clarified that the feature only existed in specific test environments where the AI was given unusually broad access to tools and highly atypical instructions.

Emad Mostaque, former CEO of Stability AI, strongly criticized the behavior, stating, “This is completely wrong and needs to be turned off; it’s a serious breach of trust and a dangerous slippery slope.”